Autonomous transportation system in an open-world environment

Abstract: Outdoor transportation tasks in open-world environments are ripe for automation by mobile manipulators. The dynamic, unstructured and unknown nature of the environments associated with such tasks (a classic example is collecting roadside garbage) makes them particularly challenging. In this article, we propose a solution for picking up, transporting and dropping off novel objects outdoors. Our solution integrates a navigation system, a grasp detection and planning system, and a custom task planner. Our experiments show that the system can transport a variety of novel objects (garbage bags, general garbage, gardening tools and fruits) outdoors in an unstructured environment with a relatively high end-to-end success rate of 85%.

Introduction

In many cities, garbage bags pile up every week, waiting to be picked up. Farm workers often have to pick up and carry heavy tools every day. Construction workers spend a lot of time moving materials around construction sites. These labor-intensive tasks could benefit from mobile manipulators. Research on such robots has been growing, but dealing with the uncertainty of many outdoor operating environments remains a major challenge. Some recent work does use mobile manipulators outdoors, for example navigating crosswalks and traffic lights to fetch coffee across a campus [3] or operating across an entire solar power plant [4]. However, none of these systems can handle outdoor pick-and-place tasks involving novel objects. This article explores what can be achieved in this area by integrating the latest grasping and navigation methods and software.

We focus on the problem of picking and placing novel objects in an open-world environment. The only input to our system is a pick point and a place point, selected by an operator on a map of a previously explored area. Once these points are set, the robot navigates to the pick location, picks up everything it finds there, transports it, and places it at the drop location. Our main contributions are as follows. First, we describe a method for navigating autonomously to these points, and we propose two transportation strategies for the task: collecting all the objects, and collecting the objects one by one. Second, we describe a grasp selection method that makes no assumptions about the objects. Finally, we describe the outdoor mobile manipulator in detail (Figure 1). We characterize the navigation and grasping systems, and report the success rates and completion times of four transportation tasks involving different objects (garbage bags, general garbage, gardening tools and fruits).
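The two transportation strategies can be contrasted with a small plan generator. This is a minimal sketch, not the authors' task planner: the action names (`navigate_to_pick`, `pick_from_floor`, and so on) are hypothetical, every action is assumed to succeed, the basket is assumed to hold all the objects at once, and in both strategies each object is assumed to ride in the basket, following the action sequence in Figure 10.

```python
from typing import List


def plan_collect_all(objects: List[str]) -> List[str]:
    """Strategy 1 (hypothetical action names): load every object into the
    basket, then make a single trip to the drop point and unload."""
    actions = ["navigate_to_pick"]
    for obj in objects:
        actions += [f"pick_from_floor:{obj}", f"place_in_basket:{obj}"]
    actions.append("navigate_to_drop")
    for obj in objects:
        actions += [f"pick_from_basket:{obj}", f"place_in_bin:{obj}"]
    return actions


def plan_one_by_one(objects: List[str]) -> List[str]:
    """Strategy 2: a full pick -> drop round trip for each object."""
    actions: List[str] = []
    for obj in objects:
        actions += [
            "navigate_to_pick",
            f"pick_from_floor:{obj}",
            f"place_in_basket:{obj}",
            "navigate_to_drop",
            f"pick_from_basket:{obj}",
            f"place_in_bin:{obj}",
        ]
    return actions


def count_drives(plan: List[str]) -> int:
    """Number of navigation legs in a plan."""
    return sum(action.startswith("navigate") for action in plan)


if __name__ == "__main__":
    items = ["bag", "rake", "apple"]
    print(count_drives(plan_collect_all(items)))  # 2
    print(count_drives(plan_one_by_one(items)))   # 6
```

Both plans contain the same arm actions, so under these assumptions they differ only in driving: collecting everything needs two navigation legs regardless of the number of objects, while one-by-one needs two per object. In exchange, the one-by-one strategy never needs basket space for more than one object.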

Figure 1. A mobile manipulator for transportation in an open environment. It consists of a mobile unmanned ground vehicle (Clearpath Warthog), a UR10 robotic arm with a Robotiq 85 gripper, a set of Intel RealSense D415 cameras and a SICK LMS511 lidar.

Robot hardware

The mobile base is a Clearpath Warthog, with a payload of 276 kg and dimensions of 1.52 x 1.38 x 0.83 m. Because the default configuration gave us steering problems, we disconnected the rear wheels from the drivetrain and mounted them on casters, converting the vehicle into a differential-drive system. Although this increases the turning radius by 0.43 m and adds some nonlinearity to the steering dynamics, it allows us to operate the system autonomously.
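After this conversion, the vehicle's motion can be approximated by the standard differential-drive (unicycle) model. The sketch below is a textbook model, not the authors' controller; it assumes the driven front wheels act as a single differential pair with a hypothetical track width `track_width_m`, that the casters are passive, and that the wheels do not slip, so it ignores the steering nonlinearity mentioned above.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float      # metres
    y: float      # metres
    theta: float  # heading, radians


def body_twist(v_left: float, v_right: float, track_width_m: float):
    """Map left/right wheel ground speeds (m/s) to forward speed v (m/s)
    and yaw rate omega (rad/s) for an ideal differential drive."""
    v = 0.5 * (v_right + v_left)
    omega = (v_right - v_left) / track_width_m
    return v, omega


def integrate(pose: Pose2D, v_left: float, v_right: float,
              track_width_m: float, dt: float) -> Pose2D:
    """Advance the pose assuming constant wheel speeds over dt
    (exact arc integration, no slip)."""
    v, omega = body_twist(v_left, v_right, track_width_m)
    if abs(omega) < 1e-9:  # straight-line motion
        return Pose2D(pose.x + v * dt * math.cos(pose.theta),
                      pose.y + v * dt * math.sin(pose.theta),
                      pose.theta)
    radius = v / omega  # signed turning radius
    theta_new = pose.theta + omega * dt
    return Pose2D(pose.x + radius * (math.sin(theta_new) - math.sin(pose.theta)),
                  pose.y - radius * (math.cos(theta_new) - math.cos(pose.theta)),
                  theta_new)
```

For example, with an illustrative 1 m track width, wheel speeds of 0.5 and 1.5 m/s give v = 1 m/s and omega = 1 rad/s, i.e. a 1 m turning radius; integrating for pi/2 s carries the robot from the origin to approximately (1, 1) with heading pi/2.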

The robotic arm is a Universal Robots UR10, with 6 degrees of freedom (DoF) and a payload of 10 kg. The end effector is a Robotiq 2-Finger 85 gripper, with a payload of 5 kg and a maximum aperture of 85 mm. The arm is mounted on the front of the Warthog with enough clearance around it to rotate without collisions, so it can pick up objects both from the ground and from the basket on top of the Warthog. The basket (51.0 x 60.5 x 19.5 cm) holds the grasped objects while they are transported to the placement location. We also mounted the UR10 control box and a PC on top of the vehicle; the PC runs the system's higher-level processing.
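The limits above translate directly into a quick feasibility filter for a candidate object. This is a minimal sketch, assuming an upstream estimate of each object's grasp width, mass and bounding box (the `ObjectEstimate` type is hypothetical). It uses the smaller of the gripper and arm payloads as an upper bound (the gripper's own weight further reduces what the arm can carry) and treats the object as a rigid box when checking the basket, which is conservative for deformable items such as garbage bags.

```python
from dataclasses import dataclass
from typing import Tuple

# Limits quoted in the hardware description.
GRIPPER_MAX_APERTURE_M = 0.085          # Robotiq 2-Finger 85
GRIPPER_PAYLOAD_KG = 5.0
ARM_PAYLOAD_KG = 10.0                   # UR10
BASKET_DIMS_M = (0.510, 0.605, 0.195)   # basket on top of the Warthog


@dataclass
class ObjectEstimate:
    """Hypothetical per-object estimate produced by perception."""
    grasp_width_m: float                 # width between the fingers
    mass_kg: float
    bbox_m: Tuple[float, float, float]   # rigid bounding box


def payload_limit_kg() -> float:
    # Upper bound only: the gripper's own mass also counts against the arm.
    return min(GRIPPER_PAYLOAD_KG, ARM_PAYLOAD_KG)


def fits_in_basket(bbox_m: Tuple[float, float, float]) -> bool:
    # Comparing sorted dimensions is equivalent to trying every
    # axis-aligned orientation of the box.
    return all(o <= b for o, b in zip(sorted(bbox_m), sorted(BASKET_DIMS_M)))


def is_transportable(obj: ObjectEstimate) -> bool:
    return (obj.grasp_width_m <= GRIPPER_MAX_APERTURE_M
            and obj.mass_kg <= payload_limit_kg()
            and fits_in_basket(obj.bbox_m))
```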

For perception, we use three Intel RealSense D415 depth cameras. Two are fixed on either side of the front of the robot, pointing downwards to cover the target pickup area (see Figure 1). The third is mounted on the gripper as a hand-eye camera. These cameras combine structured light with stereo vision to generate depth point clouds, and they work outdoors even in moderately bright sunlight. The main sensor for vehicle localization is a front-mounted single-line SICK LMS511 lidar with a 190° field of view.
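Turning a depth image into a point cloud is a per-pixel back-projection through the camera's pinhole model. The sketch below uses plain Python for clarity and assumes an undistorted image, depths already converted to metres, and intrinsics `fx, fy, cx, cy` read from the camera (the values in the example are made up). In practice, librealsense provides `rs2_deproject_pixel_to_point`, which also takes the camera's distortion model into account.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def deproject_pixel(u: int, v: int, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Back-project pixel (u, v) with depth z into the camera frame:
    x = (u - cx) * z / fx,  y = (v - cy) * z / fy."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_image_to_cloud(depth: Sequence[Sequence[float]],
                         fx: float, fy: float, cx: float, cy: float) -> List[Point3]:
    """Convert a row-major depth image (metres) into a list of 3D points,
    skipping pixels with zero depth (no valid measurement)."""
    cloud: List[Point3] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0.0:
                cloud.append(deproject_pixel(u, v, z, fx, fy, cx, cy))
    return cloud
```

For example, `depth_image_to_cloud([[0.0, 1.0], [2.0, 0.0]], 1.0, 1.0, 0.0, 0.0)` returns `[(1.0, 0.0, 1.0), (0.0, 2.0, 2.0)]`: the two zero-depth pixels are dropped and the remaining two are scaled by their depth.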

System architecture

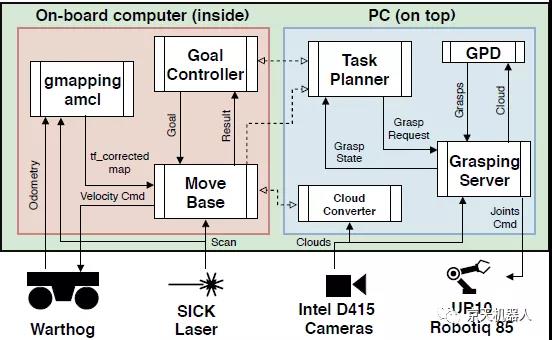

Our system is built on the Robot Operating System (ROS). Its three main parts are the navigation module, the grasping module and the task planner (Figure 2). Given their computing requirements, we split the system across two computers: 1) the onboard PC in the Warthog, which runs the entire navigation stack, and 2) the PC mounted on top, which runs the grasping node and the task planner.

Figure 2. The main components of the implemented architecture and their interactions. Dotted lines are ROS messages shared through the roscore.

Figure 3. RViz visualization of the robot localizing itself on part of the generated map, after configuring the ROS navigation package.

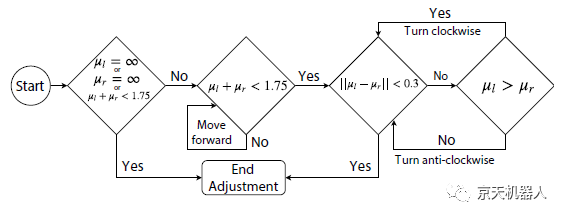

Figure 4. The process of adjusting the final pose of the Warthog.

Figure 5. (Left) The UR10 and the grasp visualized in RViz when picking up objects from the basket; (right) the wrist pose used to take views with the hand-eye camera.

Figure 10. The sequence of actions taken by the robot during a test: move to the pick-up point, pick up an object from the ground, place it in the basket, move to the drop point, record three views of the basket, and finally grasp the objects from the basket and put them in the collection box.
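The action sequence in Figure 10 maps naturally onto a small state machine in the task planner. This is a minimal sketch, not the authors' planner: the state names are hypothetical, every action is assumed to succeed, the counts of objects left on the ground and in the basket are assumed to come from perception, and the basket is assumed to be re-inspected after each placement.

```python
from enum import Enum, auto
from typing import List, Tuple


class State(Enum):
    MOVE_TO_PICK = auto()
    PICK_FROM_FLOOR = auto()
    PLACE_IN_BASKET = auto()
    MOVE_TO_DROP = auto()
    VIEW_BASKET = auto()       # record views of the basket with the hand-eye camera
    PICK_FROM_BASKET = auto()
    PLACE_IN_BOX = auto()
    DONE = auto()


def next_state(state: State, floor_left: int, basket_left: int) -> State:
    """Choose the next action from the current one and the object counts
    (assumed to be reported by perception)."""
    if state is State.MOVE_TO_PICK or state is State.PLACE_IN_BASKET:
        return State.PICK_FROM_FLOOR if floor_left > 0 else State.MOVE_TO_DROP
    if state is State.PICK_FROM_FLOOR:
        return State.PLACE_IN_BASKET
    if state is State.MOVE_TO_DROP or state is State.PLACE_IN_BOX:
        return State.VIEW_BASKET
    if state is State.VIEW_BASKET:
        return State.PICK_FROM_BASKET if basket_left > 0 else State.DONE
    if state is State.PICK_FROM_BASKET:
        return State.PLACE_IN_BOX
    return State.DONE


def simulate(n_floor_objects: int, max_steps: int = 1000) -> Tuple[List[State], int]:
    """Run the machine with every action succeeding; return the visited
    states and the number of objects delivered to the box."""
    floor, basket, delivered = n_floor_objects, 0, 0
    state = State.MOVE_TO_PICK
    trace = [state]
    while state is not State.DONE and len(trace) < max_steps:
        # Apply the (idealised) effect of the action just executed.
        if state is State.PICK_FROM_FLOOR:
            floor -= 1
        elif state is State.PLACE_IN_BASKET:
            basket += 1
        elif state is State.PICK_FROM_BASKET:
            basket -= 1
        elif state is State.PLACE_IN_BOX:
            delivered += 1
        state = next_state(state, floor, basket)
        trace.append(state)
    return trace, delivered
```

With a single object, `simulate(1)` visits MOVE_TO_PICK, PICK_FROM_FLOOR, PLACE_IN_BASKET, MOVE_TO_DROP, VIEW_BASKET, PICK_FROM_BASKET, PLACE_IN_BOX, VIEW_BASKET and DONE. Driving the transitions from observed counts rather than from a precomputed list means the loop ends only when both the ground and the basket are reported empty.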

Conclusion and Outlook

This article describes a system that solves open-world transportation tasks involving novel objects. Given pick and place points from the user, our system autonomously navigates to the pick point, grasps everything there, navigates to the place point, and then drops everything into the trash can. We evaluated the system in four experimental scenarios involving different objects: garbage bags, general garbage, tools and fruits. The experiments show that the system performs relatively well, with a grasping success rate of 80.8%, a navigation success rate of 96.1% and an overall task success rate of 85.7%.

In future work, we hope to reduce the delay between computing a grasp and executing it. Because the robot works in an open environment, factors such as wind can move objects in the meantime. In addition, adding a 3D sensor would help improve localization. Finally, we want to develop an object detection and tracking system so that the robot can find target objects on its own.

Original paper:

Zapata-Impata B S, Shah V, Singh H, et al. AutOTranS: an Autonomous Open World Transportation System. arXiv preprint arXiv:1810.03400, 2018.

Video demonstration

Text alone cannot convey the appeal of this cleaning robot. Let's see the mobile grasping application in action in the video:

Click the link to watch the video: https://mp.weixin.qq.com/s/H7cRRLtNCmyNuPxWBa36oQ

The application described above can help collect garbage into trash cans, but how can the trash cans then be moved to a garbage truck? Thirty-five students from Chalmers University of Technology, Mälardalen University and Pennsylvania State University jointly completed a project that uses the Clearpath Husky mobile robot to transport trash cans accurately to a garbage truck. The following project video shows how they used robots to accomplish this difficult task.

Garbage transfer

Click the link to watch the video: https://mp.weixin.qq.com/s/H7cRRLtNCmyNuPxWBa36oQ

Do you think this application is cool? Would you like to build one yourself? Leave us a message. All you need is a Clearpath unmanned vehicle and a UR robotic arm to build a wide range of cutting-edge applications in which robots take over human labor. Act now!

The domestic epidemic situation continues to improve, and the urban area of Wuhan has been downgraded from a high-risk to a medium-risk area. Even here at the center of the epidemic, we can now leave our residential communities to breathe fresh air. However, the epidemic is spreading rapidly in countries outside China. The mobile grasping application shared today may help countries fighting COVID-19 to clean up household garbage and medical waste more effectively and reduce the risk of infection. We hope the world can work together to defeat the COVID-19 epidemic soon.

Donghu Robot Laboratory, 2nd Floor, Baogu Innovation and Entrepreneurship Center, Wuhan City, Hubei Province, China

Tel: 027-87522899, 027-87522877
