The editor recently came across an excellent paper from ICRA 2018. I found it very inspiring and would like to share it with you. The paper is titled: MaestROB: A Robotics Framework for Integrated Orchestration of Low-Level Control and High-Level Reasoning. It shows how the MaestROB framework can be used in complex scenarios where multiple participants (humans, communication robots, and industrial robots) collaborate to perform common industrial tasks.

Summary

This article introduces a framework called MaestROB. It aims to enable robots to perform complex tasks with high precision from simple high-level instructions given through natural language or demonstration. To achieve this, it handles the hierarchy of instructions by using knowledge stored in the form of an ontology and rules that bridge the different levels of instructions. Accordingly, the framework has multiple layers of processing components: perception and actuation control at the low level; a symbolic planner and Watson APIs for cognitive capabilities and semantic understanding; and, at its core, a new open source robot middleware called Project Intu that orchestrates these components. We show how to use this framework in a complex scenario where multiple participants (humans, a communication robot, and an industrial robot) collaborate to perform common industrial tasks. A human uses natural language dialogue and demonstration to teach Pepper (a humanoid robot from Japan's SoftBank) an assembly task. Our framework helps Pepper perceive the human demonstration and generate a sequence of actions for UR5 (Universal Robots' collaborative robot arm), which finally performs the assembly task.

Introduction

Making robot programming feasible for beginners and easy for experts is key to using robots in industrial production and assembly beyond conventional applications. Tomorrow's industrial and communication robots will understand natural interfaces such as voice and human demonstration in order to learn complex skills. Rather than a single monolithic brain, these robots will be powered by many smaller cognitive services that coordinate their work to exhibit a higher level of cognition. Each service in the system will display basic intelligence so that it can effectively perform one clearly defined task. The framework that connects and orchestrates these services will be the key to building a truly intelligent system. Although the terms are often used interchangeably, we use "cognition" rather than "machine learning" or "artificial intelligence" to convey a human-like intelligence that spans multiple domains. The term artificial intelligence is generally used very broadly, whereas machine learning usually refers to systems that solve a specific problem in a single domain.

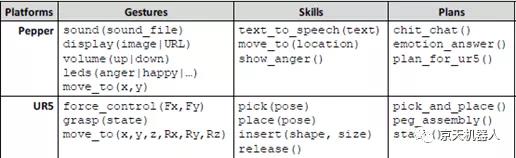

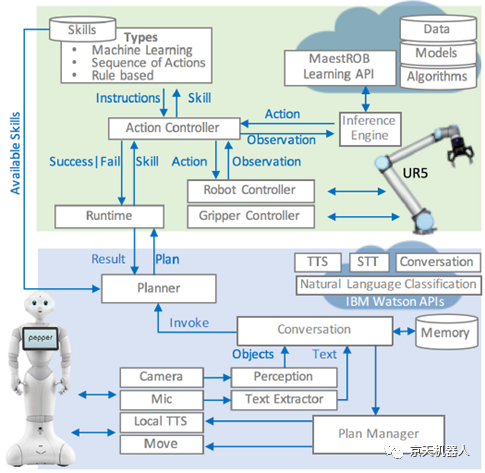

To achieve a higher level of cognitive ability, in this article we propose a robot framework, MaestROB. The components of MaestROB communicate through a new robot middleware called Project Intu, an open source project available at https://github.com/watson-intu/self. Intu uses the IBM Watson APIs to provide seamless access to many services, including conversation and image recognition. With Intu at its core, MaestROB introduces a hierarchical structure for planning by defining several levels of instructions. Using knowledge of physical constraints and relationships expressed as an ontology, these abstractions ground human instructions in actionable commands. The framework performs symbolic reasoning at the higher levels, which is important for long-term autonomy and makes the whole system accountable for its actions, while individual skills are free to use machine learning or rule-based systems. We provide mechanisms to extend the framework by developing new services or by connecting it to other robot middleware and scripting languages. This allows PDDL (Planning Domain Definition Language) planners, together with our proposed extensions for handling semantics, to be used for higher-level reasoning, while lower-level skills run as ROS (Robot Operating System) nodes. In MaestROB, the primitive intelligence of each component is orchestrated to produce complex behavior. Key features of MaestROB include, but are not limited to, cloud-based skill acquisition and sharing services, task planning with physical reasoning, perception services, learning from demonstration, multi-robot collaboration, and teaching through natural language.
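The paragraph above notes that higher-level reasoning can be handed to a PDDL planner. As a rough illustration of what that hand-off involves, the sketch below serializes an initial state and a goal, each given as a set of ground facts, into a PDDL problem string. The helper `to_pddl_problem`, the domain name `assembly`, and all predicates are hypothetical; they are not taken from MaestROB or Intu, and a matching domain file (declaring `:typing`) is assumed to exist.

```python
def _atom(fact):
    """Render a fact tuple such as ("inserted", "peg1", "hole1") as (inserted peg1 hole1)."""
    return "(" + " ".join(fact) + ")"


def to_pddl_problem(name, domain, objects, init, goal):
    """Serialize a planning problem into PDDL text (hypothetical helper).

    objects: dict mapping object name -> type name
    init, goal: iterables of ground facts given as tuples of strings
    """
    objs = " ".join(f"{o} - {t}" for o, t in sorted(objects.items()))
    init_str = " ".join(_atom(f) for f in sorted(init))
    goal_str = " ".join(_atom(f) for f in sorted(goal))
    return (
        f"(define (problem {name})\n"
        f"  (:domain {domain})\n"
        f"  (:objects {objs})\n"
        f"  (:init {init_str})\n"
        f"  (:goal (and {goal_str})))"
    )


problem = to_pddl_problem(
    name="insert-pegs",
    domain="assembly",
    objects={"peg1": "peg", "hole1": "hole"},
    init={("on-table", "peg1"), ("empty", "hole1")},
    goal={("inserted", "peg1", "hole1")},
)
print(problem)
```

Sorting the facts keeps the generated text deterministic, which makes problems easy to diff and cache.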

We demonstrate the framework with a scenario in which a person teaches a task to a communication robot (Pepper). The robot understands the task and cooperates with an industrial manipulator (UR5) to perform it, using action primitives that UR5 previously acquired through learning or programming. Industrial robots are capable of physical manipulation but lack key sensors that would help in certain situations, such as error recovery. Using the planning and collaboration services provided by MaestROB, a communication robot equipped with these sensors can analyze the plan and convert it into a sequence of commands for the robot arm. The learning functions of the framework and the hierarchical control of the middleware make these tasks easy to realize without any explicit programming.

Related work

Middleware glues the various components of a robot together and lets them communicate with each other. The Robot Operating System (ROS) is arguably the most widely used robot middleware, especially in the research community. Although ROS provides a backbone for collaboration among many nodes, it does not inherently provide any components that help plan tasks or teach robots to perform them.

Most of the custom learning and control solutions used in existing research do not generalize. The proposed framework is an effort to provide a general framework that can handle all these situations, and more, without extra work. Readers interested in related work can refer to the original paper cited below.


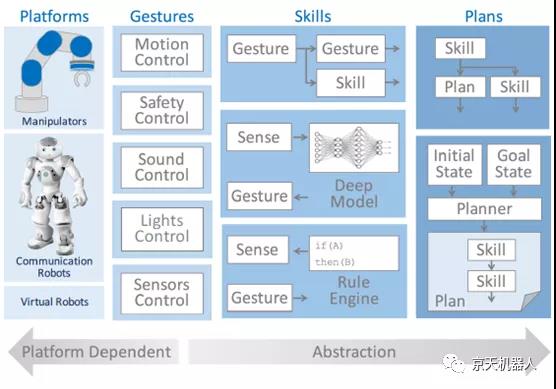

Figure 1: Different levels of instructions in MaestROB

Motivation and concept

The motivation behind MaestROB is to create a framework that performs precise physical operations in the real world by watching demonstrations or accepting natural language instructions. Humans communicate at a high level of abstraction and assume basic background knowledge. Machines, on the other hand, cannot reliably understand such vague instructions. To enable the robot to understand natural language commands in a predictable way, we propose a hierarchy of instructions. Figure 1 shows the different levels of instructions in MaestROB, namely gestures, skills, and plans. Gestures are platform dependent, but each higher level of instruction provides more abstraction from the platform. Table I gives examples of instructions at each level.
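One way to picture the three-level hierarchy is as nested data: a plan lists skills (or sub-plans), a skill lists gestures (or sub-skills), and only gestures ever reach the robot. The minimal sketch below assumes this simple containment model; the class names and gesture names are illustrative, not MaestROB's actual types.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Gesture:
    """Lowest level: a platform-specific command, e.g. move the arm or close the gripper."""
    name: str


@dataclass
class Skill:
    """Middle level: an atomic operation built from gestures (or other skills)."""
    name: str
    steps: List[Union["Skill", Gesture]] = field(default_factory=list)


@dataclass
class Plan:
    """Top level: a sequence of skills (or sub-plans) that reaches a goal."""
    name: str
    steps: List[Union["Plan", Skill]] = field(default_factory=list)


def expand(node) -> List[str]:
    """Recursively flatten a plan or skill into the gesture names it ultimately issues."""
    if isinstance(node, Gesture):
        return [node.name]
    names = []
    for step in node.steps:
        names.extend(expand(step))
    return names


grasp = Skill("grasp", [Gesture("move_above"), Gesture("move_down"), Gesture("close_gripper")])
place = Skill("place", [Gesture("move_above"), Gesture("move_down"), Gesture("open_gripper")])
pick_and_place = Plan("pick_and_place", [grasp, place])
print(expand(pick_and_place))
# ['move_above', 'move_down', 'close_gripper', 'move_above', 'move_down', 'open_gripper']
```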

Table I: Examples of instructions at different levels

A. Gestures

Gestures are used to directly control the robot. They are equivalent to the motor skills of the human body. Gestures are performed by the base platform or robot controller. Depending on the platform, some gestures may not be available.
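Because gestures are platform dependent, a natural check before running a task on a different robot is whether that platform offers every gesture the task needs. The sketch below does this with plain set arithmetic. The `Platform` class and the gesture sets given for UR5 and Pepper are hypothetical and do not reflect either robot's real controller API.

```python
class Platform:
    """A robot platform and the gestures its controller supports (hypothetical)."""

    def __init__(self, name, gestures):
        self.name = name
        self.gestures = set(gestures)

    def missing(self, required):
        """Return the required gestures this platform cannot perform, sorted for stable output."""
        return sorted(set(required) - self.gestures)

    def can_run(self, required):
        return not self.missing(required)


# Illustrative gesture sets only.
ur5 = Platform("UR5", {"move_to", "open_gripper", "close_gripper"})
pepper = Platform("Pepper", {"say", "capture_image", "move_base"})

required = ["move_to", "close_gripper", "open_gripper"]
print(ur5.can_run(required))     # True
print(pepper.missing(required))  # ['close_gripper', 'move_to', 'open_gripper']
```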

B. Skills

The next level of abstraction is skills. We define a skill as logic that consumes sensor input and generates a list of gestures. Skills are atomic operations, each performing part of an overall task; however, a skill cannot be executed on its own. MaestROB provides three ways to teach new skills to robots:

1) List of gestures or skills: In the simplest form, a skill consists of a sequential or parallel list of gestures or other skills. For example, a grasp skill can take a grasp position and perform a series of gestures: moving to a point above the position, descending, and finally closing the gripper.

2) Rule-based: In this method, a set of rules is defined that consume sensor input and issue appropriate gestures. Rule-based skills are simply defined as "if {A} then {B}" rules. An example is stopping the robot if the end effector position is outside a predefined safety zone.

3) Machine learning: Some skills are too difficult to define programmatically. MaestROB provides a cloud-based learning API that can be used to learn complex skills, such as insertion of objects with different shapes or visual servoing. The learning service supports both supervised learning and reinforcement learning paradigms. Models are trained and stored in the cloud, while inference runs locally to meet the robot's real-time constraints. In this way, even a robot with minimal processing power can learn new skills by making REST calls to the MaestROB learning API. The API also supports transferring models learned in simulation to the real environment.
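The first two ways of defining a skill can be sketched in a few lines of Python. Below, a list-based grasp skill expands a grasp position into the approach, descend, and close sequence described in item 1, and a rule-based skill implements the safety-zone example from item 2. The gesture tuples, the 0.10 m approach height, and the zone limits are illustrative assumptions, not values from the paper.

```python
# Gestures are represented here as hypothetical (gesture_name, argument) tuples.


def grasp_skill(grasp_pose, approach_height=0.10):
    """List-based skill: expand a grasp position (x, y, z) into a fixed gesture sequence."""
    x, y, z = grasp_pose
    return [
        ("move_to", (x, y, z + approach_height)),  # move above the grasp position
        ("move_to", (x, y, z)),                    # descend
        ("close_gripper", None),                   # close the gripper
    ]


# Illustrative safety zone in metres, listed in x, y, z order.
SAFETY_ZONE = {"x": (-0.5, 0.5), "y": (-0.5, 0.5), "z": (0.0, 0.8)}


def safety_rule(end_effector_pos):
    """Rule-based skill: if {end effector is outside the safety zone} then {stop}."""
    for value, (low, high) in zip(end_effector_pos, SAFETY_ZONE.values()):
        if not low <= value <= high:
            return [("stop", None)]
    return []  # rule not triggered: issue no gestures


print(grasp_skill((0.3, 0.1, 0.0)))
print(safety_rule((0.9, 0.0, 0.4)))  # [('stop', None)]
```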

MaestROB provides an interface for extending the framework with other methods of defining or learning new skills. A skill can take one or more parameters as input, for example place(pose) or moveTo(pose, pose). Every skill returns success or failure, according to whether it actually completed within the specified time.
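A skill interface that takes parameters and reports success or failure within a time budget might look like the following minimal sketch. The `Skill` base class, the `start`/`step` hooks, the timeout values, and the one-dimensional `MoveTo` toy are all hypothetical; a real controller would also pace the loop at its control rate instead of spinning.

```python
import time
from abc import ABC, abstractmethod


class Skill(ABC):
    """Hypothetical skill interface: run with parameters, return True (success) or False (failure)."""

    timeout_s = 5.0  # illustrative time budget

    def start(self, *params):
        """Optional hook called once before the first step."""

    @abstractmethod
    def step(self, *params):
        """Advance the skill by one control cycle; return True once it has finished."""

    def run(self, *params):
        self.start(*params)
        deadline = time.monotonic() + self.timeout_s
        while time.monotonic() < deadline:
            if self.step(*params):
                return True   # finished within the time budget
        return False          # time budget exceeded


class MoveTo(Skill):
    """Toy moveTo(start, goal) along one axis, moving at most 0.1 per cycle."""

    timeout_s = 1.0

    def start(self, start, goal):
        self.position = start

    def step(self, start, goal):
        delta = goal - self.position
        self.position += max(-0.1, min(0.1, delta))
        return abs(goal - self.position) < 1e-9


class NeverFinishes(Skill):
    """Toy skill that never completes, to show the failure path."""

    timeout_s = 0.05

    def step(self, *params):
        return False


move = MoveTo()
ok = move.run(0.0, 0.5)
print(ok, NeverFinishes().run())  # True False
```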

C. Plans

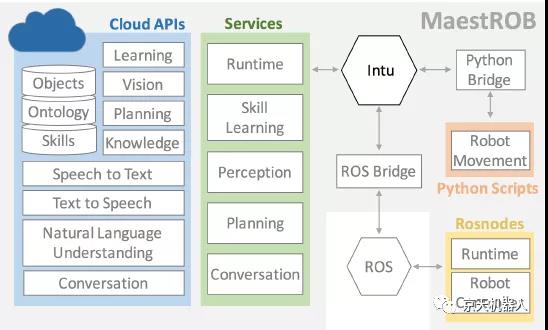

Conceptually, a plan is a high-level abstraction that leads from a given initial state to a goal state. It consists of a sequence of skills that is defined, learned, or computed by the symbolic planner. A plan corresponds to a single instruction in a user manual or a single command issued by a human. Within the framework, the planner acts as a bridge between cognitive semantics and skills. The framework currently provides three methods for defining new plans.

1) List of skills or plans: A plan can be defined as a parallel or sequential list of skills or other plans, either programmatically or through natural language communication using a fixed grammar. This method is inspired by previous work by J. Connell et al. For example, we can teach the robot to wave by telling it that waving means raising its right hand and moving it left and right several times.

2) Learning from demonstration or dialogue: A plan can also be described by defining its final (goal) state, verbally or programmatically. MaestROB provides a mechanism to ground natural language commands in the desired goal state using natural language classification and mapping. Another method supported by the framework is to show the key states of the system instead of explicitly giving the initial and goal states. Just as a person only needs to see the initial and final states of a task to work out the sequence of operations required, the planner can use perception and relation extraction to let the robot reproduce the same operations with its available skills. Before computing and executing the plan, the framework observes the current state of the world to determine the initial state.
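MaestROB computes plans with a symbolic (PDDL) planner. To make the idea of searching from an initial state to a goal state over the available skills concrete, here is a minimal breadth-first forward search over STRIPS-style operators. This is a swapped-in toy planner, not the one MaestROB uses, and the `pick`/`insert` operators and predicates are hypothetical.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional, Tuple

Fact = Tuple[str, ...]


class Operator(NamedTuple):
    """A grounded skill with STRIPS-style preconditions, add effects, and delete effects."""
    name: str
    pre: FrozenSet[Fact]
    add: FrozenSet[Fact]
    delete: FrozenSet[Fact]


def plan(initial, goal, operators) -> Optional[List[str]]:
    """Breadth-first search for the shortest skill sequence that makes every goal fact true."""
    start, goal = frozenset(initial), frozenset(goal)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for op in operators:
            if op.pre <= state:
                nxt = (state - op.delete) | op.add
                if nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, steps + [op.name]))
    return None  # no plan exists with the available skills


def pick_op(peg):
    """Hypothetical pick skill: needs the peg on the table and a free hand."""
    return Operator(f"pick({peg})",
                    pre=frozenset({("on_table", peg), ("hand_free",)}),
                    add=frozenset({("holding", peg)}),
                    delete=frozenset({("on_table", peg), ("hand_free",)}))


def insert_op(peg, hole):
    """Hypothetical insert skill: needs the peg in hand and the hole empty."""
    return Operator(f"insert({peg},{hole})",
                    pre=frozenset({("holding", peg), ("empty", hole)}),
                    add=frozenset({("inserted", peg, hole), ("hand_free",)}),
                    delete=frozenset({("holding", peg), ("empty", hole)}))


ops = [pick_op("peg1"), insert_op("peg1", "hole1")]
initial = {("on_table", "peg1"), ("empty", "hole1"), ("hand_free",)}
result = plan(initial, {("inserted", "peg1", "hole1")}, ops)
print(result)  # ['pick(peg1)', 'insert(peg1,hole1)']
```

Because the search is breadth-first, the first plan found uses the fewest skills; a `None` result corresponds to the case where no plan exists with the skills on hand.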

As with skills, we define an interface for implementing other planning methods. A plan is logic that produces a sequence of one or more skills. Every plan returns success or failure.

Unlike skills, plans can be executed independently. A plan can contain a single skill, a list of skills, or a sequence of skills computed dynamically by a planner or a similar system. A plan can be started by a human instruction, triggered in response to certain conditions, or run in a continuous loop. If a skill fails, the goal can still be reached by generating a new plan that uses the current state as the initial state. However, if the planner cannot find a suitable plan, human intervention may be required.
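The recovery behavior described above (replan from the current state after a skill fails, and fall back to a human when no plan can be found) can be sketched as a small control loop. The callback signatures (`sense`, `planner`, `execute`), the `max_replans` limit, the string return values, and the toy world used in the usage example are assumptions made for illustration.

```python
def execute_with_replanning(goal, sense, planner, execute, max_replans=3):
    """Run a plan; after a skill failure, replan from the currently observed state.

    sense()              -> current world state
    planner(state, goal) -> list of skills, or None if no plan exists
    execute(skill)       -> True on success, False on failure
    """
    for _ in range(max_replans + 1):
        steps = planner(sense(), goal)
        if steps is None:
            return "needs_human"  # the planner cannot find a suitable plan
        # all() stops at the first failed skill, so later skills are not attempted.
        if all(execute(skill) for skill in steps):
            return "success"
        # A skill failed: loop again, using the current state as the new initial state.
    return "needs_human"  # replanning budget exhausted


# Toy world: the goal is to achieve facts "a" and "b"; skill "b" fails on its first attempt.
world = set()
failures_left = {"b": 1}


def sense():
    return set(world)


def planner(state, goal):
    return sorted(goal - state)


def execute(skill):
    if failures_left.get(skill, 0) > 0:
        failures_left[skill] -= 1
        return False
    world.add(skill)
    return True


result = execute_with_replanning({"a", "b"}, sense, planner, execute)
print(result, sorted(world))  # success ['a', 'b']
```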

Figure 2: MaestROB framework

Figure 3: MaestROB planner

Demonstration

In the demo scenario, two robots (UR5 and Pepper) perform tasks in collaboration with a human. MaestROB is versatile enough to handle communication robots and industrial manipulators at the same time. We show how different robots can use their respective strengths to achieve a common goal.

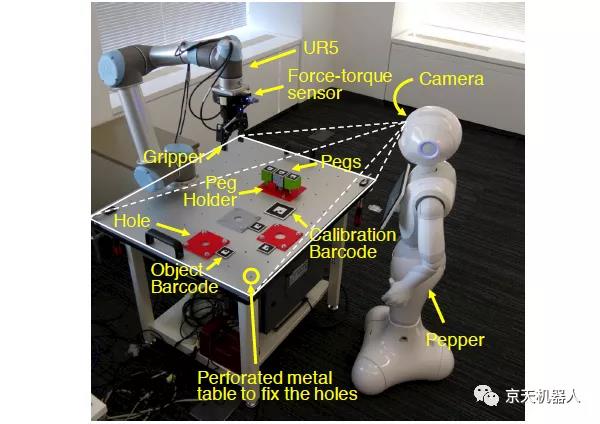

Pepper is a humanoid robot from Japan's SoftBank, equipped with multiple sensors, including vision. It is mobile, but it lacks a powerful gripper and cannot perform precise physical manipulation. UR5 (Universal Robots' collaborative robot arm), on the other hand, is a fixed, industrial-grade manipulator. UR5 can perform high-precision, repeatable (0.03 mm) physical operations, but because it has no vision sensors, it moves blindly. In the demonstration scenario, the human is responsible for giving the demonstration, controlling the robots, and making final decisions. The setup of the two robots used in the demonstration is shown in Figure 5. The demonstration can also be run on other robotic platforms, provided the required gestures are available.

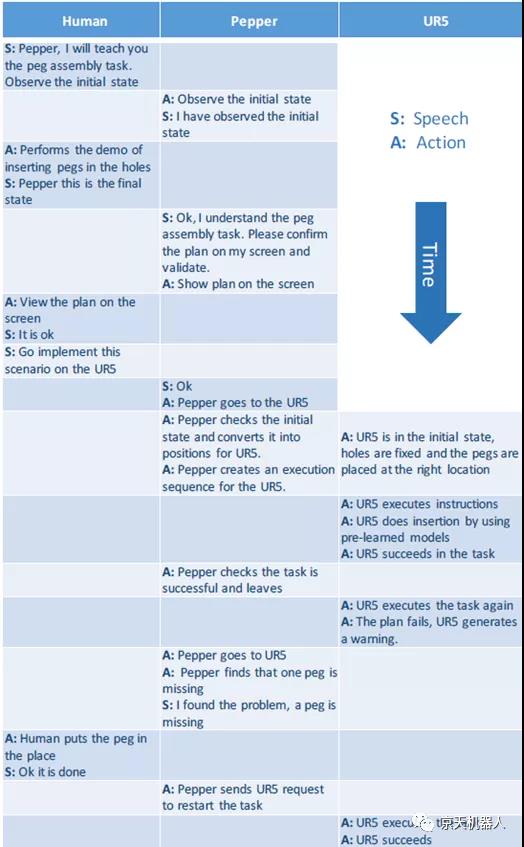

Table II shows the detailed plan and the operations performed by each participant. One of the advantages of MaestROB is its connection to the IBM Watson APIs, which makes it easy to build a sophisticated dialogue system. Grounding fluent natural language commands into complex but predictable sequences of actions demonstrates the strength of MaestROB.

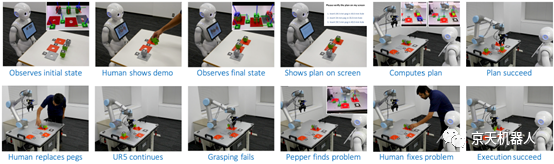

The demonstration starts with a person performing the task while talking to the Pepper robot. By using the conversation service to understand the intent of each command, the robot knows when to capture a key frame and when the demonstration ends. In this example, the robot records the initial and final states of the demonstration. Through the dialogue, it also remembers the name of the task it is learning (the assembly task), and it understands that the final state is the last frame of the demonstration. The initial and final frames are sent to the perception service, which uses barcode pose detection to find the poses of all barcodes. The barcode ID of each part and the transformation between each barcode and its object are defined separately in an object database. The state of the final frame is then determined by the relation extractor. Since the task domain (insertion) is predefined, the appropriate ontology and relation database are loaded.
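The perception steps in this paragraph (barcode poses, an object database holding each barcode's offset to its object, and a relation extractor) can be approximated in the plane as follows. This sketch assumes planar poses `(x, y, theta)`, a hypothetical `OBJECT_DB`, and a toy rule that a peg lying within 5 mm of a hole is inserted in it; the actual system works with full barcode poses and a loaded ontology.

```python
import math

# Hypothetical object database: barcode ID -> (object name, barcode-to-object offset (dx, dy, dtheta)).
OBJECT_DB = {
    7: ("peg_small", (0.0, -0.02, 0.0)),
    9: ("hole_small", (0.0, 0.03, 0.0)),
}


def compose(pose, offset):
    """Apply an offset expressed in the barcode's frame to a planar pose (x, y, theta)."""
    x, y, th = pose
    dx, dy, dth = offset
    return (x + dx * math.cos(th) - dy * math.sin(th),
            y + dx * math.sin(th) + dy * math.cos(th),
            th + dth)


def object_poses(detections):
    """Map detected barcode poses {id: pose} to object poses {name: pose}, skipping unknown IDs."""
    poses = {}
    for code, pose in detections.items():
        if code in OBJECT_DB:
            name, offset = OBJECT_DB[code]
            poses[name] = compose(pose, offset)
    return poses


def extract_relations(poses, tol=0.005):
    """Toy relation extractor: a peg within tol metres of a hole is considered inserted in it."""
    facts = set()
    for peg, (px, py, _) in poses.items():
        if not peg.startswith("peg"):
            continue
        for hole, (hx, hy, _) in poses.items():
            if hole.startswith("hole") and math.hypot(px - hx, py - hy) <= tol:
                facts.add(("inserted", peg, hole))
    return facts


final_poses = object_poses({7: (0.10, 0.22, 0.0), 9: (0.10, 0.17, 0.0), 42: (0.0, 0.0, 0.0)})
final_state = extract_relations(final_poses)
print(final_state)  # {('inserted', 'peg_small', 'hole_small')}
```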

Table II: Step-by-step demonstration scenario. The actors are the human, Pepper, and UR5.

In this scenario, Pepper does not compute a plan for itself; instead, it computes a solution to be executed by UR5. Pepper moves between the demonstration table and UR5 using predefined positions. When Pepper reaches UR5, it captures an image of the initial state. This image is used to calibrate Pepper's camera with respect to the UR5 robot and to compute the barcode poses of all objects. Pepper then uses the initial state observed in the image and the goal state learned from the human demonstration to generate an executable plan for the manipulator. Before that, however, UR5's skill database must be shared with Pepper. The planner uses a common-sense ontology to check whether each operation is allowed; for example, a large item may not be inserted into a smaller hole. Once the plan is sent to UR5, UR5 starts executing the task using position control. Once the task succeeds, Pepper can go back, and UR5 continues to repeat the task. In this demonstration, a human restores the pegs to their original state before each UR5 iteration; in a factory, this is usually done by conveyor belts or other machines.
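The common-sense check mentioned above, that a large item may not be inserted into a smaller hole, can be expressed as a rule over an object-size table. The sizes, object names, and the `violations` helper below are hypothetical; a real ontology would hold richer relations than a single diameter per object.

```python
# Hypothetical size table (diameters in millimetres); names and values are illustrative only.
DIAMETER_MM = {"peg_small": 10, "peg_large": 16, "hole_small": 11, "hole_large": 17}


def insertion_allowed(peg, hole, sizes=DIAMETER_MM):
    """Common-sense rule: a peg fits only into a hole at least as wide as the peg."""
    return sizes[peg] <= sizes[hole]


def violations(steps, sizes=DIAMETER_MM):
    """Return the ("insert", peg, hole) steps of a candidate plan that break the size rule."""
    return [
        step for step in steps
        if step[0] == "insert" and not insertion_allowed(step[1], step[2], sizes)
    ]


candidate = [
    ("pick", "peg_small"),
    ("insert", "peg_small", "hole_small"),
    ("pick", "peg_large"),
    ("insert", "peg_large", "hole_small"),  # large peg, small hole: not allowed
]
print(violations(candidate))  # [('insert', 'peg_large', 'hole_small')]
```

A planner could reject any candidate plan with a non-empty violation list, or encode the same rule as a precondition of the insert skill.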

Figure 5: Pepper uses its camera to analyze the position of the object, creates a plan and sends it to UR5

UR5 continues to execute the task until something goes wrong, at which point it reports the failed plan. Pepper then returns to the manipulator to observe the state of the world and compares the current state with the initial state it saw earlier. Finding that pegs are missing, it asks a human for help. After the human puts the missing pegs back, UR5 can continue the repetitive task of inserting pegs into their corresponding holes.
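Pepper's diagnosis step, comparing the current state with the initial state it saw earlier, reduces in the simplest case to a set difference over the detected objects. The `diagnose` helper and the request message are illustrative assumptions.

```python
def diagnose(initial_objects, current_objects):
    """Compare the objects seen before and after a failure.

    Returns the sorted list of objects that were present initially but are missing
    now, together with a request for human help (or None if nothing is missing).
    """
    missing = sorted(set(initial_objects) - set(current_objects))
    if missing:
        return missing, "Please put back: " + ", ".join(missing)
    return missing, None


initial = {"peg1", "peg2", "hole1", "hole2"}
current = {"hole1", "hole2", "peg2"}  # peg1 has disappeared from the scene
missing, request = diagnose(initial, current)
print(missing, request)  # ['peg1'] Please put back: peg1
```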

Unlike the simpler skills UR5 performs, the insertion skill uses machine learning to find the direction of the hole from force-torque sensor readings. It was trained with reinforcement learning through MaestROB's cloud-based learning API.

Figure 6 shows the MaestROB services running on each robot to complete the tasks defined in the demo scenario. Most services use cloud-based APIs. Note that to create a plan for UR5, Pepper must know which skills are available to UR5; this is achieved by sharing UR5's skill database with Pepper. In the demo, Pepper runs an instance of the Project Intu middleware, which makes it easy to implement speech-to-text (STT), text-to-speech (TTS), dialogue, perception, planning, and more. UR5 executes Pepper's plan using MaestROB services running on ROS.

Figure 6: Important services for running the demo. Services communicate through Intu's publish/subscribe model. The robot controller of UR5 is a ROS node and is connected to Intu through ROS
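Since the services communicate through a publish/subscribe model, here is a minimal in-process bus that shows the pattern: a plan published on one topic is consumed by a controller, which reports back on another topic. The `Bus` class, topic names, and callbacks are hypothetical stand-ins and are not Intu's actual API.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class Bus:
    """Minimal in-process publish/subscribe bus (a stand-in, not Intu's API)."""

    def __init__(self):
        self._subs: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subs[topic].append(callback)

    def publish(self, topic, message):
        # Deliver synchronously to every subscriber of the topic, in subscription order.
        for callback in list(self._subs[topic]):
            callback(message)


bus = Bus()
log = []


def ur5_controller(steps):
    """Hypothetical controller service: executes each step, then reports completion."""
    for step in steps:
        log.append(("execute", step))
    bus.publish("status", "plan finished")


def pepper_monitor(status):
    """Hypothetical monitoring service on the communication robot."""
    log.append(("status", status))


bus.subscribe("plan", ur5_controller)
bus.subscribe("status", pepper_monitor)
bus.publish("plan", ["pick(peg1)", "insert(peg1,hole1)"])
print(log)
```

Decoupling publishers from subscribers this way means a service such as the planner does not need to know which robot, or how many, will act on its output.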

Figure 7: Screenshot of the demo video

Conclusion

This article presents a framework that supports the next generation of robots in solving problems that cannot be solved with traditional programming methods. The robot middleware introduced in this article (Project Intu) is now available as an open source project. We also introduced key services that allowed us to demonstrate complex scenarios involving collaboration among multiple robots and humans. MaestROB is particularly useful for small and medium enterprises (SMEs), which require relatively fast time to market, frequent production line changes, and low production volumes. Workers can communicate with robots in natural language to teach them new skills or have them perform existing ones.

As future work, we hope to share machine learning-based skills among multiple robots. As described in this article and shown in the demo, failed plans usually require human assistance; one future direction is to enable robots to resolve common problems on their own. We also plan to demonstrate a system that can understand written and spoken instructions to assemble complex objects such as IKEA furniture.

Video results display

Click the link to watch the video: https://mp.weixin.qq.com/s/BM2yUrn4e07dgLwAiQtJyA

The Intu framework used in this article is open source and supports multiple platforms. Link: https://github.com/watson-intu/self

Interested readers are encouraged to try it on their own robot platforms; we hope to see more successful cases of multi-robot skill sharing. The project spans many disciplines and research topics, such as natural language processing, visual recognition, and robot arm planning. The team has achieved good research results and published papers. If you have any needs regarding the platform or any of the robot equipment shown in the video, please feel free to contact us.

Original paper:

Munawar A, De Magistris G, Pham TH, et al. MaestROB: A robotics framework for integrated orchestration of low-level control and high-level reasoning[C]//2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018: 527-534.

As the authorized agent and largest sales platform in China for many internationally renowned intelligent-robot companies, including SoftBank Robotics (Japan), Universal Robots (Denmark), Clearpath (Canada), and ROBOTIS (South Korea), the company has become the agent with the strongest technology-integration capability and after-sales service.

Inviting business partners

For purchasing, cooperation, or other inquiries,

please contact Wuhan Jingtian Electric Co., Ltd.

Marketing Department: 18062020228, 18062020229

Phone: 027-87522899, 027-87522877

Official website: http://www.jingtianrobots.com

For more industry information, stay tuned to Jingtian Electric’s official WeChat account

Donghu Robot Laboratory, 2nd Floor, Baogu Innovation and Entrepreneurship Center,Wuhan City,Hubei Province,China
