Mobile robots are spreading across military, industrial, and civilian fields, and the technology is still under continuous development. Mobile robot research has made encouraging progress in recent years. With the continuing improvement of sensor technology, intelligent technology, and computing technology, intelligent mobile robots can take on more roles in production and daily life. So which positioning technologies do mobile robots mainly rely on? In summary, there are currently five main positioning technologies.

1. Ultrasonic navigation and positioning technology for mobile robots

The working principle of ultrasonic navigation and positioning is similar to that of laser and infrared ranging. Typically, the transmitting probe of the ultrasonic sensor emits ultrasonic waves, which are reflected by obstacles in the medium and return to the receiving device.

The sensor receives the reflection of the ultrasonic signal it emitted, and the distance S from the obstacle to the robot is calculated from the propagation speed and the time difference between emission and echo reception. Because the wave travels to the obstacle and back, the round-trip path is halved: S = Tv/2, where T is the time difference between ultrasonic transmission and reception, and v is the wave speed of ultrasound in the medium.
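The formula S = Tv/2 can be sketched in a few lines. This is a minimal illustration, not a driver for any particular sensor: the function names are hypothetical, and the linear temperature model for the speed of sound in dry air is a common approximation that the original text does not mention.

```python
def speed_of_sound_air(temp_c):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius.

    Assumption: the common linear approximation v = 331.3 + 0.606 * T.
    """
    return 331.3 + 0.606 * temp_c


def echo_distance(time_of_flight_s, wave_speed_m_s=343.0):
    """Return the obstacle distance S = T * v / 2 in metres.

    time_of_flight_s is the delay T between emitting the pulse and
    receiving its echo; the division by 2 accounts for the round trip.
    """
    if time_of_flight_s < 0:
        raise ValueError("time of flight must be non-negative")
    return time_of_flight_s * wave_speed_m_s / 2.0


# Example: an echo that arrives 10 ms after emission, at 20 degrees Celsius.
distance_m = echo_distance(0.010, speed_of_sound_air(20.0))
```

Passing the wave speed as a parameter keeps the same function usable for other media, where v differs greatly from its value in air.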

In mobile robot navigation and positioning, the inherent defects of ultrasonic sensors, such as specular reflection and a limited beam angle, make it difficult to capture complete information about the surrounding environment. An ultrasonic sensing system composed of multiple sensors is therefore usually used to build the corresponding environment model. The information collected by the sensors is transmitted to the mobile robot's control system through serial communication. The control system then processes the data with suitable algorithms, based on the collected signals and the established mathematical model, to obtain the robot's position and environment information.

Because ultrasonic sensors are low in cost, fast at collecting information, and high in range resolution, they have long been widely used in mobile robot navigation and positioning. They also need no complicated imaging equipment to collect environmental information, so ranging is fast and real-time performance is good.

At the same time, ultrasonic sensors are not easily affected by external conditions such as weather, ambient light, shadows cast by obstacles, and surface roughness. Ultrasonic navigation and positioning is widely used in the perception systems of various mobile robots.

2. Mobile robot visual navigation and positioning technology

In visual navigation and positioning systems, the most widely used approach at home and abroad is local-vision navigation, in which a camera is installed on the robot. In this approach, the control equipment and sensing devices are mounted on the robot body, and high-level decisions such as image recognition and path planning are all made by the on-board control computer.

The visual navigation and positioning system mainly consists of a camera (or CCD image sensor), video signal digitization equipment, a DSP-based fast signal processor, and a computer with its peripherals. Many robot systems now use CCD image sensors. The basic element is a row of silicon imaging elements: photosensitive elements and a charge transfer device are arranged on a substrate, and through the sequential transfer of charges, the video signals of multiple pixels are read out one after another in a time-shared manner. For example, the resolution of images collected by an area-array CCD sensor can range from 32×32 to 1024×1024 pixels.

Put simply, the visual navigation and positioning system works by optically processing the environment around the robot. The camera first collects image information, the collected information is compressed, and it is then fed to a learning subsystem composed of neural networks and statistical methods. The learning subsystem links the collected image information with the robot's actual position to complete autonomous navigation and positioning.

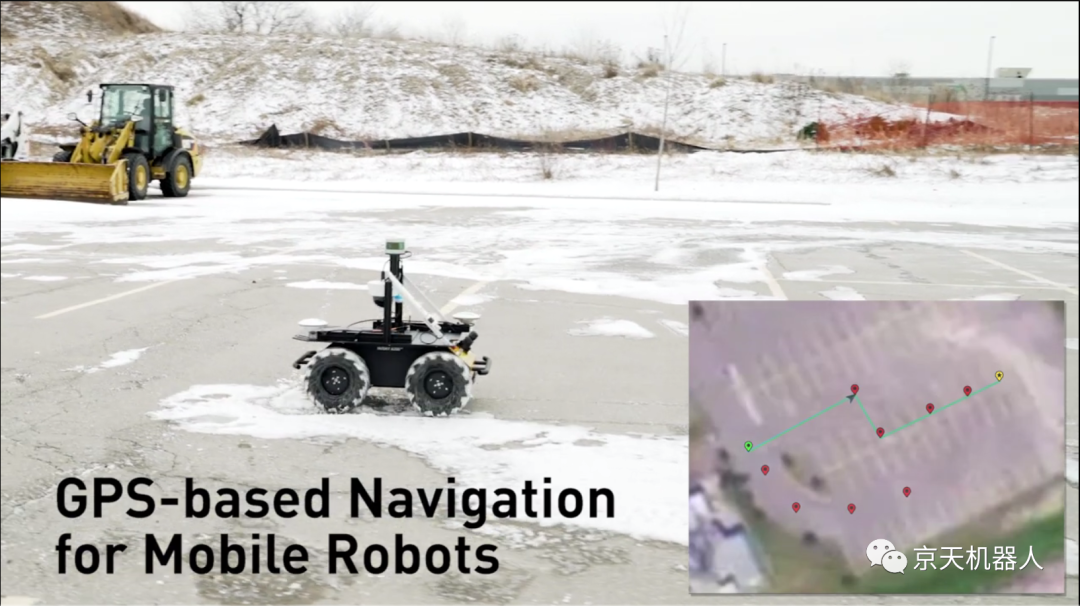

3. GPS Global Positioning System

Today, intelligent robot navigation and positioning applications generally use the pseudo-range differential dynamic positioning method. A reference receiver and a dynamic (mobile) receiver jointly observe four GPS satellites, and a suitable algorithm then yields the robot's three-dimensional position coordinates at a given moment. Differential dynamic positioning eliminates satellite clock errors. For users as far as 1000 km from the reference station, it can eliminate satellite clock errors and errors caused by the troposphere, which significantly improves the accuracy of dynamic positioning.
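The core idea of pseudo-range differential correction can be sketched as follows. The reference receiver sits at a surveyed position, so the difference between its true geometric range to each satellite and the pseudo-range it actually measures estimates the errors shared by both receivers. The function names, satellite identifiers, and coordinate values below are hypothetical, and the sketch ignores receiver clock bias, which is still solved together with position afterwards.

```python
import math


def geometric_range(receiver_xyz, satellite_xyz):
    """Straight-line distance between a receiver and a satellite (metres)."""
    return math.dist(receiver_xyz, satellite_xyz)


def pseudorange_corrections(reference_xyz, satellites, reference_pseudoranges):
    """Per-satellite correction computed at the reference station.

    correction = true geometric range - measured pseudo-range
    """
    return {
        sat_id: geometric_range(reference_xyz, satellites[sat_id]) - measured
        for sat_id, measured in reference_pseudoranges.items()
    }


def apply_corrections(rover_pseudoranges, corrections):
    """Add the reference corrections to the mobile receiver's pseudo-ranges."""
    return {
        sat_id: rho + corrections[sat_id]
        for sat_id, rho in rover_pseudoranges.items()
        if sat_id in corrections
    }


# Made-up geometry: two satellites, each with an error common to both receivers.
satellites = {"G01": (20_200e3, 0.0, 0.0), "G02": (0.0, 20_200e3, 0.0)}
common_error = {"G01": 12.5, "G02": -7.0}  # metres, e.g. clock plus atmosphere
reference = (0.0, 0.0, 0.0)
rover = (1000.0, 0.0, 0.0)

ref_pr = {s: geometric_range(reference, p) + common_error[s] for s, p in satellites.items()}
rover_pr = {s: geometric_range(rover, p) + common_error[s] for s, p in satellites.items()}
corrected = apply_corrections(rover_pr, pseudorange_corrections(reference, satellites, ref_pr))
```

In this idealized example the shared error cancels exactly. In practice the cancellation degrades as the two receivers move apart, because atmospheric errors stop being identical at both sites.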

However, in mobile navigation the positioning accuracy of a mobile GPS receiver is affected by satellite signal conditions and the road environment, as well as by clock errors, propagation errors, receiver noise, and many other factors. Navigation that relies on GPS alone therefore suffers from relatively low positioning accuracy and low reliability, so robot navigation usually supplements GPS with data from a magnetic compass and optical encoders. In addition, GPS is not suitable for indoor or underwater robots, or for robot systems that require high position accuracy.

4. Light reflection navigation and positioning technology for mobile robots

The typical light reflection navigation and positioning method mainly uses laser or infrared sensors to measure distance. Both laser and infrared use light reflection technology for navigation and positioning.

The laser global positioning system generally consists of a laser rotating mechanism, a mirror, a photoelectric receiving device, and a data acquisition and transmission device.

In operation, the laser beam is emitted through the rotating mirror mechanism. When it sweeps across a cooperative landmark made of retroreflectors, the reflected light is processed by the photoelectric receiving device into a detection signal, which triggers the data acquisition program to read the encoder data of the rotating mechanism (the measured angle of the target). The data is then transmitted to the host computer for processing. From the known landmark positions and the detected information, the current position and orientation of the sensor in the landmark coordinate system can be calculated, which achieves navigation and positioning.
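One way to compute position and orientation from measured landmark angles is three-landmark triangulation. The sketch below follows the formulation known as the ToTal algorithm (Pierlot and Van Droogenbroeck). Choosing this algorithm is an assumption, since the text does not name one, and the function name and argument layout are hypothetical. Bearings are taken counter-clockwise from the sensor's forward axis, and degenerate geometries are only partly detected.

```python
import math


def _cot(angle_rad):
    """Cotangent; undefined when the angle is a multiple of pi."""
    return math.cos(angle_rad) / math.sin(angle_rad)


def triangulate(landmarks, bearings):
    """Return (x, y, heading) from three known landmarks and their bearings.

    landmarks: three (x, y) positions in the landmark coordinate system.
    bearings:  three angles in radians, measured in the sensor frame.
    """
    (x1, y1), (x2, y2), (x3, y3) = landmarks
    a1, a2, a3 = bearings

    # Express landmarks 1 and 3 relative to landmark 2.
    x1p, y1p = x1 - x2, y1 - y2
    x3p, y3p = x3 - x2, y3 - y2

    # Cotangents of bearing differences; the unknown heading cancels out here.
    t12 = _cot(a2 - a1)
    t23 = _cot(a3 - a2)
    t31 = (1.0 - t12 * t23) / (t12 + t23)

    # Modified circle-centre coordinates used by the ToTal derivation.
    x12, y12 = x1p + t12 * y1p, y1p - t12 * x1p
    x23, y23 = x3p - t23 * y3p, y3p + t23 * x3p
    x31 = (x3p + x1p) + t31 * (y3p - y1p)
    y31 = (y3p + y1p) - t31 * (x3p - x1p)

    k31 = x1p * x3p + y1p * y3p + t31 * (x1p * y3p - x3p * y1p)
    d = (x12 - x23) * (y23 - y31) - (y12 - y23) * (x23 - x31)
    if abs(d) < 1e-12:
        raise ValueError("degenerate geometry, e.g. sensor on the circle through the landmarks")

    x = x2 + k31 * (y12 - y23) / d
    y = y2 + k31 * (x23 - x12) / d

    # Heading: world-frame direction to landmark 1 minus its measured bearing.
    heading = math.atan2(y1 - y, x1 - x) - a1
    heading = math.atan2(math.sin(heading), math.cos(heading))  # wrap to (-pi, pi]
    return x, y, heading


# Example with made-up landmarks and a sensor at (2, 1) with heading 0.3 rad.
landmarks = [(5.0, 0.0), (4.0, 6.0), (-3.0, 4.0)]
true_pose = (2.0, 1.0, 0.3)
bearings = [math.atan2(ly - true_pose[1], lx - true_pose[0]) - true_pose[2]
            for lx, ly in landmarks]
pose = triangulate(landmarks, bearings)
```

A practical system would typically see more than three landmarks and noisy angles, so a least-squares fit over all observations is the more likely choice there.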

Laser ranging has the advantages of a narrow beam, good parallelism, low scattering, and high resolution in the ranging direction. However, it is also affected by environmental factors, so denoising the collected signals is a significant problem when using laser ranging. In addition, laser ranging has blind spots, so it is difficult to achieve navigation and positioning with laser alone. In industrial applications, it is generally used for field inspection within a specific range, such as detecting pipeline cracks.

Infrared sensing technology is often used in the multi-joint robot obstacle avoidance system to form a large area of robot "sensitive skin", covering the surface of the robot arm, which can detect various objects encountered during the operation of the robot arm.

A typical infrared sensor includes a solid-state light-emitting diode that emits infrared light and a solid-state photodiode that acts as a receiver. The infrared LED emits a modulated signal, and the infrared photodiode receives the modulated signal reflected by the target. Signal modulation and dedicated infrared filters suppress interference from ambient infrared light. Let the output voltage Vo represent the reflected light intensity. Vo is then a function of the distance between the probe and the workpiece: Vo = f(x, p), where x is the distance between the probe and the workpiece, and p is the reflection coefficient of the workpiece, which depends on the color and roughness of the target surface.

When the workpiece is the same target with the same p value, x and Vo correspond one-to-one, so x can be obtained by interpolating experimental proximity measurements recorded for various targets. In this way, the infrared sensor measures the distance from the robot to the target object, and the mobile robot can then be navigated and positioned with other information processing methods.
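The interpolation step can be illustrated with a piecewise-linear lookup over a calibration table. This is a sketch under stated assumptions: the calibration values are invented, the function name is hypothetical, and the table must come from a single target surface (one p value) so that voltage changes monotonically with distance.

```python
def distance_from_voltage(v_out, calibration):
    """Estimate distance x from output voltage Vo by linear interpolation.

    calibration: list of (distance_m, voltage_v) pairs measured for ONE
    target surface, with voltage monotonic in distance.
    """
    if len(calibration) < 2:
        raise ValueError("need at least two calibration points")
    points = sorted(calibration, key=lambda p: p[1])  # order by voltage
    if not points[0][1] <= v_out <= points[-1][1]:
        raise ValueError("voltage outside the calibrated range")
    for (x_lo, v_lo), (x_hi, v_hi) in zip(points, points[1:]):
        if v_lo <= v_out <= v_hi:
            if v_hi == v_lo:
                return x_lo
            fraction = (v_out - v_lo) / (v_hi - v_lo)
            return x_lo + fraction * (x_hi - x_lo)
    raise ValueError("no matching calibration segment")


# Invented calibration data: closer targets reflect more light.
calibration = [(0.05, 3.0), (0.10, 1.5), (0.20, 0.5)]
estimated_x = distance_from_voltage(1.0, calibration)  # between 0.10 m and 0.20 m
```

A target with a different color or roughness needs its own table, because p changes the whole Vo curve.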

Although infrared sensors offer high sensitivity, a simple structure, and low cost, they have high angular resolution but low distance resolution. In mobile robots they are therefore often used as proximity sensors that detect approaching or suddenly moving obstacles so that the robot can make an emergency stop.

5. SLAM (Simultaneous Localization and Mapping), the current mainstream robot positioning technology

Most of the industry-leading service robot companies have adopted SLAM technology. What exactly is SLAM technology? Simply put, SLAM technology refers to the complete process of positioning, mapping, and path planning for robots in an unknown environment.

SLAM (Simultaneous Localization and Mapping) has mainly been used to study intelligent robot movement since it was proposed in 1988. In a completely unknown indoor environment, a robot equipped with core sensors such as lidar can use SLAM to build a map of the environment and move through it autonomously.

The SLAM problem can be described as: the robot starts to move from an unknown location in an unknown environment, locates itself according to the position estimation and sensor data during the movement, and builds an incremental map at the same time.
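The loop described above (predict the pose from motion, correct it with sensor data, and extend the map) can be shown with a deliberately tiny one-dimensional toy. This is not a real SLAM algorithm: practical systems use probabilistic filters or graph optimization, and the fixed blending gain, function name, and data layout here are all illustrative assumptions.

```python
def toy_slam_1d(steps, gain=0.5):
    """Toy 1D localization-and-mapping loop.

    steps: list of (odometry_delta, observations), where observations maps
           a landmark id to the measured offset (landmark - robot).
    Returns (pose_history, landmark_map).
    """
    pose = 0.0       # the robot defines its unknown start as the origin
    landmarks = {}   # incremental map: landmark id -> estimated position
    history = []
    for odometry_delta, observations in steps:
        # 1. Predict: dead-reckon from odometry.
        pose += odometry_delta

        # 2. Localize: pull the pose toward what already-mapped landmarks imply.
        errors = [landmarks[lid] - offset - pose
                  for lid, offset in observations.items() if lid in landmarks]
        if errors:
            pose += gain * sum(errors) / len(errors)

        # 3. Map: add landmarks seen for the first time.
        for lid, offset in observations.items():
            if lid not in landmarks:
                landmarks[lid] = pose + offset

        history.append(pose)
    return history, landmarks


# The robot truly moves 1.0 per step, but odometry over-reports 1.1.
# Landmark "A" is truly at 5.0, so the measured offsets are 4.0 and then 3.0.
history, landmark_map = toy_slam_1d([(1.1, {"A": 4.0}), (1.1, {"A": 3.0})])
```

After the second step the estimate is 2.15 instead of the dead-reckoned 2.2, closer to the true position of 2.0. The map inherits the error of the first pose estimate, which illustrates why localization and mapping have to be solved together.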

The realization ways of SLAM technology mainly include VSLAM, Wifi-SLAM and Lidar SLAM.

1. VSLAM (Visual SLAM)

Refers to navigation and exploration in an indoor environment using cameras, including depth cameras such as Kinect. Put simply, it works by optically processing the environment around the robot. The camera first collects image information, the collected information is compressed, and it is then fed to a learning subsystem composed of neural networks and statistical methods. The learning subsystem links the collected image information with the robot's actual position to complete autonomous navigation and positioning.

2. Wifi-SLAM

Refers to positioning with the multiple sensing devices in a smartphone, including Wifi, GPS, gyroscope, accelerometer, and magnetometer, and to drawing accurate indoor maps from the resulting data with algorithms such as machine learning and pattern recognition. The provider of this technology was acquired by Apple in 2013. Whether Apple has applied Wifi-SLAM to the iPhone, which would make every iPhone user the carrier of a small mapping robot, is not yet known. More accurate positioning benefits not only maps: it makes every application that relies on location-based services (LBS) more accurate.

3. Lidar SLAM

Refers to the use of lidar as a sensor to obtain map data so that the robot can realize simultaneous positioning and map construction. As far as the technology itself is concerned, after years of verification, it has been quite mature, but the bottleneck problem of the high cost of Lidar needs to be solved urgently.

Google's driverless car uses this technology; the lidar installed on its roof comes from the American company Velodyne. As it rotates at high speed, this lidar emits 64 laser beams into its surroundings. The beams hit surrounding objects and return, and the distance between the car body and those objects can be calculated. The computer system then draws a fine 3D terrain map from these data and combines it with a high-resolution map to generate different data models for use by the on-board computer system. Lidar accounts for half of the cost of the entire vehicle, which may be one of the reasons why Google's unmanned vehicles have not been mass-produced.
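Turning individual beam returns into 3D points is a spherical-to-Cartesian conversion. The sketch below is generic rather than Velodyne's actual data format: the function names and the axis convention (x forward, y left, z up, azimuth counter-clockwise from x) are assumptions made for illustration.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(time_s):
    """Distance to the reflecting object from the laser round-trip time."""
    return SPEED_OF_LIGHT_M_S * time_s / 2.0


def beam_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one lidar return to an (x, y, z) point in the sensor frame.

    Assumed convention: x forward, y left, z up; elevation is the beam's
    angle above the horizontal plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


# One return: 1 microsecond round trip, beam pointing left and level.
point = beam_to_point(range_from_round_trip(1e-6), math.pi / 2, 0.0)
```

Repeating this for every beam at every rotation angle yields the point cloud from which a 3D map can be built.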

Lidar has the characteristics of strong directivity, so that the accuracy of navigation is effectively guaranteed, and it can well adapt to the indoor environment.

Build a better positioning system for mobile robots

In the logistics industry, automated guided vehicles (AGVs) roam the factory floor to transport parts from one station to another. Large robots and AGVs can carry a variety of objects, but they usually lack high-precision positioning.

For example, AGVs usually use wheel encoder data (essentially counting how many times each wheel turns) to estimate how far they have driven and determine their position. The problem is that if the wheels slip in place (a common phenomenon, especially for differential-steering robots such as Jackal), the position data becomes increasingly inaccurate.
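Why wheel slip corrupts the position estimate becomes clear from how encoder odometry is integrated. The following is a minimal sketch of standard differential-drive dead reckoning. The function name, parameter names, and numeric values are hypothetical and are not taken from Jackal or any specific AGV.

```python
import math


def integrate_odometry(pose, left_ticks, right_ticks,
                       ticks_per_rev, wheel_radius_m, track_width_m):
    """Update (x, y, heading) from encoder tick counts of both wheels."""
    x, y, theta = pose
    metres_per_tick = 2.0 * math.pi * wheel_radius_m / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick

    d_center = (d_left + d_right) / 2.0          # distance of the midpoint
    d_theta = (d_right - d_left) / track_width_m  # change in heading

    # Midpoint approximation: move along the average heading of the step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    return (x, y, theta + d_theta)


# Both wheels spin one full revolution. If they slipped in place, the robot
# did not move, yet odometry still reports about 0.63 m of forward travel.
reported = integrate_odometry((0.0, 0.0, 0.0), 1000, 1000,
                              ticks_per_rev=1000, wheel_radius_m=0.1,
                              track_width_m=0.5)
```

Because each update adds to the previous pose, an error introduced by one slip is never removed by later encoder readings. This is the motivation for an external position reference.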

For example, if the wheel slips when the robot puts a small part on the conveyor belt, low accuracy may cause the part to accidentally be placed too close to the side of the conveyor belt, slip off and break.

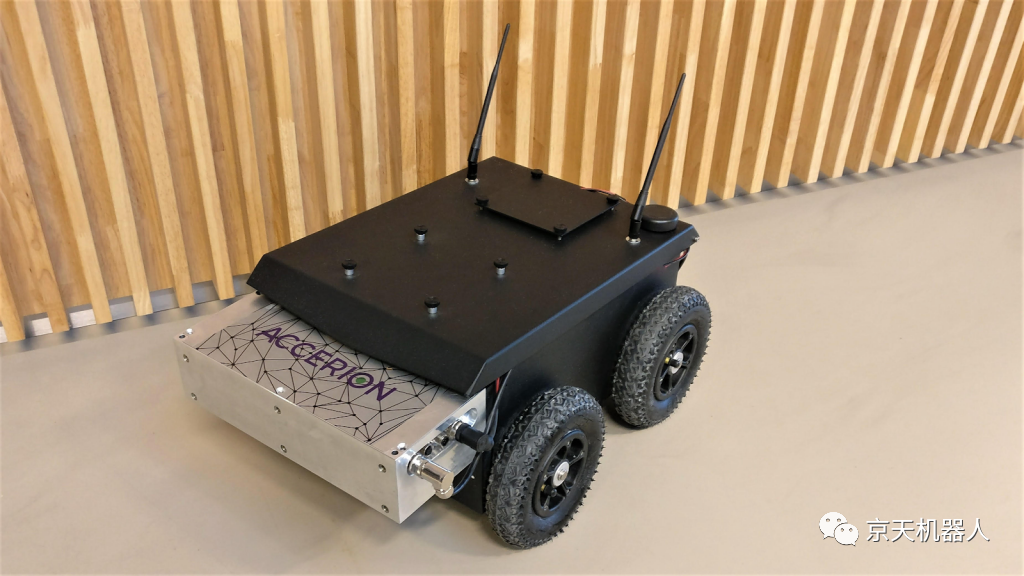

Accerion's (https://accerion.tech/) mobile robot positioning method achieves sub-millimeter positioning accuracy at high speed without any special infrastructure, thanks to an optical sensor that scans the ground while the robot is traveling. Accerion offers two types of sensors: Jupiter (an all-in-one positioning system) and Triton (a smaller unit that can be used as an add-on to an existing system).

In both cases, a camera image of the existing floor is taken and matched against the environment model to determine the robot's position. Because the sensor views the floor directly, it measures pure displacement. It takes only two cables to integrate Accerion's sensors into Jackal and obtain indoor sub-millimeter positioning, which helps us control mobile platforms such as Jackal more accurately.

The Accerion company in the Netherlands was founded in 2015 and is committed to creating a positioning system for mobile robots. Their unique camera-based system can provide sub-millimeter positioning for AGVs and mobile robots such as Clearpath's Jackal UGV (Unmanned Ground Vehicle).

