1. Troubles encountered

I have encountered a problem recently. Everyone is talking about "autonomous navigation", but how autonomous is this "autonomy" of autonomous navigation? Is it completely autonomous?

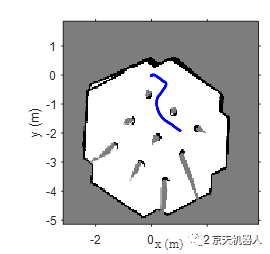

To answer this question, you must first recall the working mode of the mobile robot. Generally, a map of the environment is constructed first through the SLAM algorithm, and then autonomous navigation is started on this map.

In the process of autonomous navigation, it can be divided into

①Determine a target point first

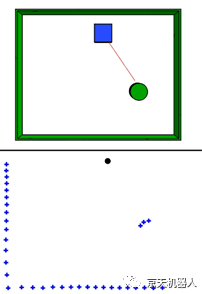

②The path planning algorithm plans the global path from the current position to the target point

③Finally track the global path, and then cooperate with the local dynamic obstacle avoidance algorithm to reach the target point

Among them, steps ② and ③ are completely autonomous, and step ① needs to be operated manually. You might say that this must be operated by humans, because robots have to serve humans, and of course they have to reach the "designated" target points according to the wishes of humans.

However, the difference between an intelligent machine and a tool machine is that an intelligent machine is a robot that accomplishes tasks in a human way and according to human wishes, such as chatting, companionship, education, and shopping guides, which are all human lifestyles. The tool-type machine requires humans to operate the machine in the same way as a machine to complete tasks, such as remote control, programming, driving, and keyboard input, all of which are machine methods.

According to this view, we tremblingly mark a target point for the autonomous navigation robot. This behavior is obviously that humans will instantly downgrade the robot to a tool-type machine in the same way as a machine. If you follow the human way, you should complete the task like this, just say: "Turtlebot, help me fetch a bottle of beer" instead of giving the coordinates of a beer bottle.

2. How to solve it?

First, recognize what I say into text, that is, from "speech" to "text", the robot voice interaction has been and basically realized. The step from "text" to "map coordinates" is really a bit difficult.

Because, the map we built in the first step is only the outline points where the tables, chairs, and walls in the room are recognized, and they are all regarded as "obstacles" in this map.

The points on the map are not connected with the names of the objects in the environment, so the robot has no concept of objects, and of course they can't see it, just like "Indians can't see Columbus's ship on the sea".

So the way to crack is to associate the points on the map with the labels of the objects in the scene. This solution is called "Semantic SLAM".

As the name implies, the map constructed in SLAM must include not only the obstacle information, but also the name of the scene object and the corresponding left information.

3. How to build a semantic map?

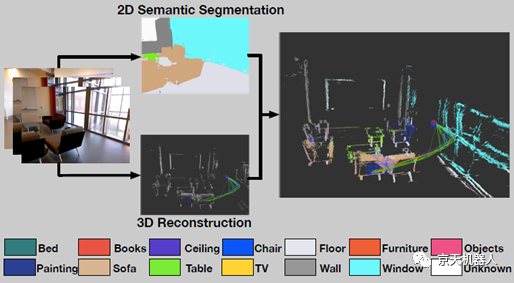

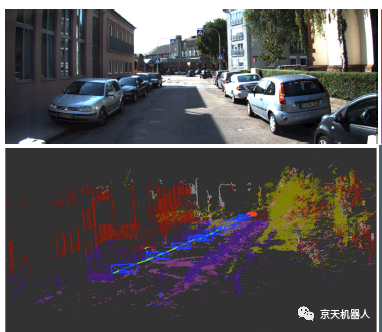

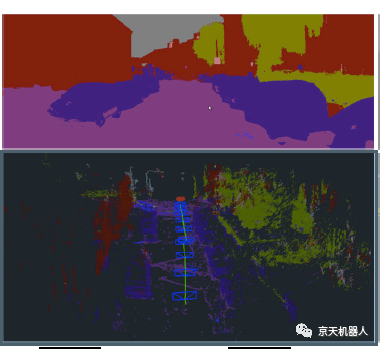

At present, the construction method of semantic map is generally to add a visual neural network to recognize the image objects observed by the camera while constructing the map by SLAM, and finally map the recognized tags to the map.

For example, the beer I want to drink may be just an area distributed according to a cylinder in the point cloud map. At the same time, after the visual neural network recognizes, it will mark the place where the beer bottle appears with a color block, and mark it as "beer"; finally, through the multi-view geometric projection, the color block of this beer is corresponding to the point cloud By mapping the regional coordinates, the map coordinates corresponding to the label "beer" are obtained.

In this way, when the robot hears the task of: I want a bottle of beer, it first recognizes what I say as the words "find" and "beer". Look for "beer" in all the tags on the map, and then get the point cloud area coordinates corresponding to the "beer" tag. Finally, set this "coordinate" as the navigation target point, and use the autonomous navigation method to reach this target. Cooperating with the hand-eye grasping of the robotic arm to complete the task, the robot can complete the autonomous navigation in the human way. (Do you think there is a better solution?)

4.Case analysis

Here we actually analyze the algorithm cases proposed in two papers, which are worth learning and reference:

Case 1: Dense 3D SLAM+DSS net

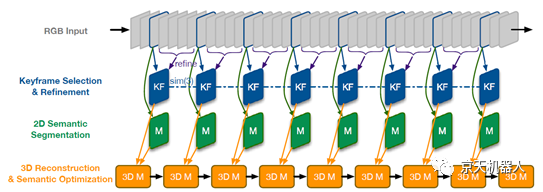

The method introduced in the paper [1] is to use RGB-D images as input, and then build a dense point cloud map based on the ElasticFusion SLAM [2] algorithm. For the mapping between maps and semantic labels, it is implemented through a "destroy convolutional semantic segmentation neural network" (DSS net), which inputs RGB images and depth information, and outputs labeled areas + labels.

Case 2: Semi-dense SLAM+DeepCNN net

In the paper [4], a conditional random field algorithm was first used to construct a semi-dense map. Then use Deep CNN to realize semantic label association.

Deep CNN is an end-to-end learning and reasoning process that uses an inverse convolutional layer, and then uses a maximum pooling layer and a deconvolutional layer to implement encoding and decoding.

5. Difficulty

It doesn't sound too difficult, so why isn't it widely used? Because there are a few places where the theory is easy to talk about, but the practice is too difficult:

1). The first is the occlusion problem. The neural network can identify an occluded area. But the mapped 3D point cloud will be "out of context". For example, a person who is obscured may only mark the head area, but the three-dimensional map is constructed with a whole body, so that it is not known where the body is during the mapping.

2). Secondly, our semantic recognition relies too much on the accuracy of the neural network to recognize the mark, but the current visual neural network has a very high recognition rate. But pixel-level "labeling" is far from reaching the accuracy we need. Perhaps we should consider integrating the semantic recognition process into the map point cloud construction process, instead of throwing this task directly to the visual neural network like the "handsman".

3). The other thing is that all of our above processes are "paper" analysis and a little bit of "laboratory" results. Put it on the actual robot, because the visual neural network has a huge demand for computing resources, it is difficult to apply to the embedded computing platform of the mobile robot. Actual robots need algorithms with less computational complexity so that they can be applied.

6. Reflection

It seems that my robot has not been able to install a viable "semantic comprehension device" so far, all researchers, come on. (There are methods, lack of optimization, are you jealous in this direction? There are many variables, the environment is complicated, and a lot of papers are published.)

But here I am thinking about another question. Is the robotics field an "academic" or a kind of "engineering"?

Because we always add "patches" when we encounter problems during the robot development process. If positioning is needed, add a positioning module and then import a positioning algorithm package; if identification is required, add an image module and recognition algorithm package. If you need a robotic arm, install one, and if you need both arms, install two. And the entire robot seems to be pieced together from various modules, playing like building blocks. If the robotic scholar is not dedicated to a certain module field (this field is strictly speaking only in this module), he will mobilize like a contractor. All kinds of other people's existing modules, I am very busy.

Just like before Einstein put forward the theory of relativity, everyone solved problems under Newton's three laws. But in the micro and macro, the calculation results are obviously in error. In order to remedy it, a bunch of "correction factors" will be added. Aren't these correction factors just like the various "functional patches" and "patchwork modules" we add to the robot? Until Einstein put forward the theory of relativity, he unified the macro and the micro under a concise and clear formula of relativity, so that it does not need to be repaired when applied.

The robotics field is also looking forward to the emergence of a theoretical or algorithmic framework like "relativity". Maybe we who are trapped in various patches will sneer at this point of view. How can it be possible that such a pile of fragmented algorithm packages can be unified under one thing?

Haha, maybe before Einstein, people who were caught up in the tinkering with Newton's theory also thought so. So, let's look forward to the early appearance of the "Einstein" in the field of robotics! Maybe it's you or me who was inexplicably selected by God at a certain moment, time is not for me.

references

1. J. McCormac, A. Handa, A. Davison, and S. Leutenegger.Semanticfusion: Dense 3d semantic mapping with convolutional neuralnetworks. arXiv preprint arXiv:1609.05130,2016.

2. T. Whelan, S. Leutenegger, R. F. Salas-Moreno, B. Glocker, and A. J. Davison. Elasticfusion: Dense slam without a pose graph. Proc. Robotics: Science and Systems, Rome, Italy, 2015.

3. Salas-Moreno R F. Dense Semantic SLAM[D]. Imperial College London, 2014.

4. Li X, Belaroussi R. Semi-dense 3d semanticmapping from monocular slam. arxiv 2016[J]. arXiv preprint arXiv:1611.04144.

5. Wang Z, Zhang Q, Li J, et al. A computationallyefficient semantic slam solution for dynamic scenes[J]. Remote Sensing, 2019,11(11): 1363.

6. Himri K, Ridao P, Gracias N, et al. SemanticSLAM for an AUV using object recognition from point clouds[J].IFAC-PapersOnLine, 2018, 51(29): 360-365.

Original statement: This document is an original document. Welcome to forward and reprint quotes, please indicate the source.

与此原文有关的更多信息要查看其他翻译信息,您必须输入相应原文

Donghu Robot Laboratory, 2nd Floor, Baogu Innovation and Entrepreneurship Center,Wuhan City,Hubei Province,China

Tel:027-87522899,027-87522877

Robot System Integration

Artificial Intelligence Robots

Mobile Robot

Collaborative Robotic Arm

ROS modular robot

Servo and sensor accessories

Scientific Research

Professional Co Construction

Training Center

Academic Conference

Experimental instruction

Jingtian Cup Event